Last updated: July 30th at 00:00 CEST

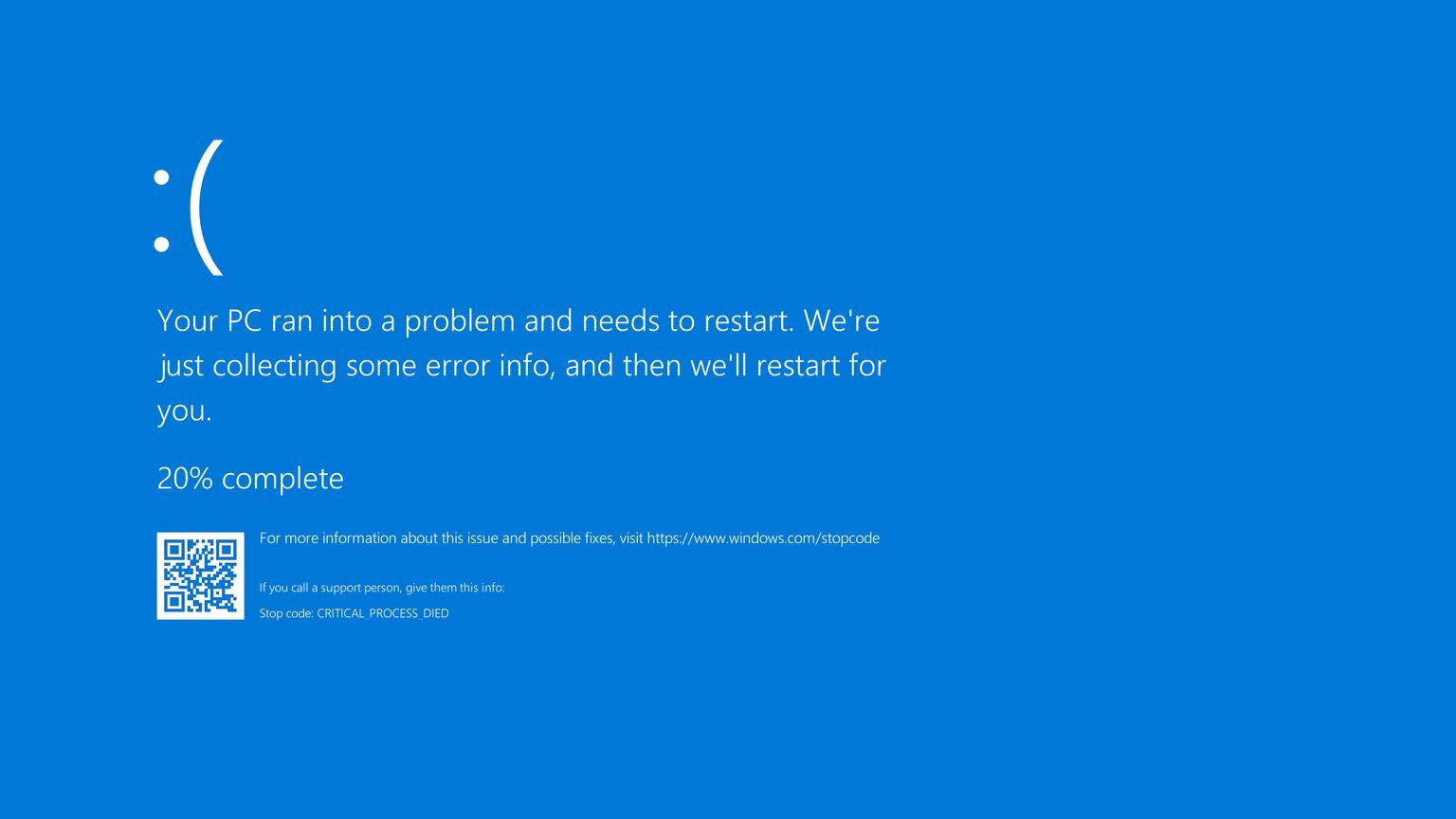

In this blog, we provided real-time updates on the blue screen of death (BSOD) issue caused by a CrowdStrike Falcon content update on the 19th of July 2024.

This blog is not being updated anymore.

Phishing attacks: CrowdStrike has observed phishing attacks targeting customers and contacts, with attackers pretending to be from CrowdStrike Support and offering unsolicited help. These messages are malicious; CrowdStrike Support will never proactively reach out without prior contact.

Summary

Over the past few days, CrowdStrike has released extensive information about the incident in which a faulty Content Channel update for the CrowdStrike Falcon Sensor crashed Windows hosts.

- Link to CrowdStrike's remediation and guidance hub, containing:

  - Technical Details

  - Recovery Options

  - Official Statement

  - Preliminary Post Incident Review

- Link to CrowdStrike's blog with technical details

Details

Affected Windows hosts experience a blue screen of death (BSOD) related to the Falcon Sensor.

CrowdStrike Engineering has identified the content deployment related to this issue, which was pushed at 4:09 AM UTC on July 19th 2024, and has reverted those changes. As a result, hosts that booted up after 5:27 AM UTC on July 19th 2024 should not experience any issues.
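As a quick check, the window above can be expressed in a few lines of Python. This is a minimal sketch that only restates the rule from CrowdStrike's statement; it assumes you know a host's boot time in UTC (for example from your own asset inventory), and `booted_after_revert` is a hypothetical helper name. It also converts both timestamps to CEST (a fixed UTC+2 offset in July), the time zone used at the top of this blog.

```python
from datetime import datetime, timedelta, timezone

# Timestamps from CrowdStrike's statement (July 19th 2024, UTC).
FAULTY_PUSH = datetime(2024, 7, 19, 4, 9, tzinfo=timezone.utc)
REVERTED = datetime(2024, 7, 19, 5, 27, tzinfo=timezone.utc)

# CEST is UTC+2; a fixed offset is enough for display in July.
CEST = timezone(timedelta(hours=2), "CEST")


def booted_after_revert(boot_time: datetime) -> bool:
    """True if the host booted after 05:27 UTC on July 19th 2024.

    Per CrowdStrike's statement, such hosts should not experience the issue.
    """
    if boot_time.tzinfo is None:
        raise ValueError("boot_time must be timezone-aware (UTC)")
    return boot_time > REVERTED


if __name__ == "__main__":
    for label, ts in (("Faulty update pushed", FAULTY_PUSH), ("Update reverted", REVERTED)):
        print(f"{label}: {ts:%H:%M} UTC = {ts.astimezone(CEST):%H:%M} CEST")
    # Hypothetical boot time, for illustration only.
    print(booted_after_revert(datetime(2024, 7, 19, 6, 0, tzinfo=timezone.utc)))
```

Converted to CEST, the push and the revert happened at 06:09 and 07:27 respectively.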

This issue is not impacting Mac- or Linux-based hosts.

Remediation Steps

WARNING: Do not uninstall CrowdStrike or follow the steps below if your systems are not currently having issues.

Automated Recovery

An automated solution is available. This remediation uses Falcon’s existing built-in quarantine functionality, which removes the problematic channel file before it can crash affected hosts. It only applies to affected hosts that can establish a network connection to the CrowdStrike cloud. This solution was enabled for all customers in the EU-1, US-1 and US-2 regions on the 23rd of July. Simply rebooting a system multiple times may remediate it, provided the system can reach the CrowdStrike cloud; the chance of success is higher when the system uses a stable, wired network connection. If the system still crashes after multiple reboots, manual recovery is required.

Manual Recovery

CrowdStrike has published a video that guides you through the manual recovery process for individual hosts.

To remediate large numbers of hosts, you can create bootable media that repairs the affected systems. For details, see the CrowdStrike Remediation and Guidance Hub under 'How Do I Remediate Impacted Hosts'. This page also explains how to handle BitLocker-encrypted hosts.

Manual Recovery Steps for Individual Systems Stuck in a Blue Screen Reboot Loop

Update: We first recommended booting into Safe Mode (per the official CrowdStrike recommendation), but many customers reported problems booting into Safe Mode. The following steps should work in all cases, including on systems without a local administrator account or an internet connection.

- Let the system boot up and crash three times. This brings up a recovery menu.

- Click Troubleshoot

- Click Advanced Options

- Click Command Prompt

- If your system is protected with BitLocker, you will need to enter your BitLocker Recovery Key

  - If BitLocker is managed via Intune, the key can be found at https://myaccount.microsoft.com, under "devices". Make sure to match the hostname of the device and the Key ID

  - Otherwise, ask your local IT administrator for your BitLocker Recovery Key

- In the command prompt window, type each of the following commands, pressing Enter after each one.

  - Warning: The Command Prompt starts on the X:\ drive. Do not forget to switch to C:\ by typing these commands exactly. If the C: drive is not available, some users report having to adjust BIOS settings.

  - c:

  - cd windows

  - cd system32

  - cd drivers

  - cd crowdstrike

  - del C-00000291*

  - exit

- Click Continue to start Windows
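If you need to hand out many BitLocker Recovery Keys, for example from an export of your Intune keys, the hostname and Key ID matching from the BitLocker step above can be scripted. This is a hypothetical sketch: `RecoveryKeyEntry`, `find_recovery_key` and `looks_like_recovery_key` are illustrative names, not part of any Microsoft tool, and the export format is assumed. It matches the Key ID as a prefix, since the recovery screen may show only the first characters of the ID. As an extra typo check, it assumes the commonly documented format of BitLocker recovery passwords: 48 digits in eight groups of six, each group a multiple of 11 and below 720896.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class RecoveryKeyEntry:
    """Hypothetical record, e.g. one row of an exported key list."""
    hostname: str
    key_id: str
    recovery_key: str


def looks_like_recovery_key(key: str) -> bool:
    """Typo check for a 48-digit recovery password (8 groups of 6 digits).

    Assumes the commonly documented property that every group is a
    multiple of 11 and below 720896.
    """
    groups = key.strip().split("-")
    if len(groups) != 8:
        return False
    for group in groups:
        if len(group) != 6 or not group.isdigit():
            return False
        value = int(group)
        if value % 11 != 0 or value >= 720896:
            return False
    return True


def find_recovery_key(
    entries: Iterable[RecoveryKeyEntry], hostname: str, key_id_shown: str
) -> Optional[str]:
    """Return the key whose hostname and Key ID both match, else None.

    key_id_shown may be the full Key ID or only its leading characters,
    so it is matched as a case-insensitive prefix.
    """
    prefix = key_id_shown.strip().strip("{}").upper()
    if not prefix:
        return None
    for entry in entries:
        if entry.hostname.strip().lower() != hostname.strip().lower():
            continue
        if not entry.key_id.strip().strip("{}").upper().startswith(prefix):
            continue
        if looks_like_recovery_key(entry.recovery_key):
            return entry.recovery_key
    return None


if __name__ == "__main__":
    # Made-up example data, not a real key.
    keys = [
        RecoveryKeyEntry(
            "LAPTOP-042",
            "1A2B3C4D-0000-0000-0000-000000000000",
            "000011-000022-000033-000044-000055-000066-000077-000088",
        )
    ]
    print(find_recovery_key(keys, "laptop-042", "1a2b3c4d"))
```

Requiring both the hostname and the Key ID to match avoids handing out a key that belongs to a different device with a similar name.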

Steps for Public Cloud or Similar Environments, Including Virtual Machines

Option 1:

- Detach the operating system disk volume from the impacted virtual server

- Create a snapshot or backup of the disk volume before proceeding further as a precaution against unintended changes

- Attach/mount the volume to a new virtual server

- Navigate to the C:\Windows\System32\drivers\CrowdStrike directory

- Locate the file matching “C-00000291*.sys”, and delete it.

- Detach the volume from the new virtual server

- Reattach the fixed volume to the impacted virtual server
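If Python is available on the new virtual server, the "locate and delete" step can be scripted. This is a minimal sketch, assuming the impacted disk is mounted with its root at the path you pass in; `remove_faulty_channel_files` is a hypothetical helper, and `D:\` in the example below is a placeholder for whatever drive letter the attached volume receives. By default it only lists the matching files; pass `dry_run=False` to delete them, and only after taking the snapshot or backup described above.

```python
from pathlib import Path


def remove_faulty_channel_files(volume_root: str, dry_run: bool = True) -> list[Path]:
    """Find, and optionally delete, C-00000291*.sys on a mounted Windows volume.

    volume_root is the root of the attached disk (for example "D:\\").
    Returns the matching files, whether or not they were deleted.
    """
    driver_dir = Path(volume_root) / "Windows" / "System32" / "drivers" / "CrowdStrike"
    if not driver_dir.is_dir():
        raise FileNotFoundError(f"CrowdStrike driver directory not found: {driver_dir}")

    matches = sorted(driver_dir.glob("C-00000291*.sys"))
    for channel_file in matches:
        if dry_run:
            print(f"Would delete: {channel_file}")
        else:
            channel_file.unlink()
            print(f"Deleted: {channel_file}")
    return matches
```

For example, run `remove_faulty_channel_files("D:\\")` first to review the matches, then `remove_faulty_channel_files("D:\\", dry_run=False)` to delete them before detaching the volume.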

Option 2:

- Roll back to a snapshot taken before 0409 UTC.

Steps for Repairing VMs per Cloud Provider

If the steps above do not solve the issue, the three largest cloud providers have published their own steps for recovering virtual machines on their platforms.

AWS

Azure: https://aka.ms/CSfalcon-VMRecoveryOptions

Google: https://status.cloud.google.com/incidents/DK3LfKowzJPpZq4Q9YqP

Recovering without a BitLocker recovery key

CrowdStrike has described a workaround for situations where a BitLocker recovery key is not available. Note that this still requires credentials with local administrator privileges. The knowledge base article describing these steps is available here: https://www.crowdstrike.com/wp-content/uploads/2024/07/BitLocker-recovery-without-recovery-keys-2.0.pdf

Frequently Asked Questions

Would following these steps compromise my security?

No. After you follow the steps above, CrowdStrike resumes normal operation on the system, and your systems remain protected.

Is this caused by a cyber attack?

CrowdStrike has identified the root cause as a defect in a content update. There is no indication that this originated from a cyber attack.

Do I need to uninstall CrowdStrike?

If your systems have booted up and are (back) online, there is no need to uninstall CrowdStrike.

During the first hours of the incident, we temporarily made it possible for our customers to uninstall CrowdStrike by disabling Uninstall Protection. It has since been re-enabled, as CrowdStrike is no longer pushing the update that caused the issues. Uninstalling CrowdStrike is not recommended at this point: a fix is already available, and uninstalling would put your systems at risk.

Are any other solutions available?

We have seen reports of users applying a PXE-boot based solution [1], [2]. This can be effective if you have a large number of affected systems. Note that these solutions are experimental and complex, and have many prerequisites (such as the hosts being on a network with a PXE server). Smaller companies will most likely find the manual steps more effective. We advise Eye customers to contact support before pursuing this option.

Others have reported that rebooting a system 15 times [3] allows it to recover. It is unknown whether the CrowdStrike software still functions after these steps, as this approach may prevent its drivers from loading entirely.

Finally, both CrowdStrike [4] and Microsoft [5] have released a USB recovery tool to help IT admins speed up the repair process.

The file is still on my system. Will I be impacted?

- Channel file "C-00000291*.sys" with timestamp of 0527 UTC or later is the reverted (good) version.

- Channel file "C-00000291*.sys" with timestamp of 0409 UTC is the problematic version.
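To see which version a host has, you can compare each file's last-modified time against these two timestamps. This is a minimal sketch, assuming the file's modification time is the timestamp CrowdStrike refers to and that both timestamps are on July 19th 2024; `classify_timestamp` and `classify_channel_files` are hypothetical helper names. Timestamps between the two, or before 04:09 UTC, are reported as not covered, because the guidance above only describes these two cases.

```python
from datetime import datetime, timedelta, timezone
from pathlib import Path

# Assumed to be July 19th 2024, in UTC.
FAULTY = datetime(2024, 7, 19, 4, 9, tzinfo=timezone.utc)
REVERTED = datetime(2024, 7, 19, 5, 27, tzinfo=timezone.utc)


def classify_timestamp(modified: datetime) -> str:
    """Classify a channel file 291 timestamp according to the guidance above."""
    if FAULTY <= modified < FAULTY + timedelta(minutes=1):
        return "problematic"
    if modified >= REVERTED:
        return "reverted (good)"
    return "not covered by the guidance"


def classify_channel_files(driver_dir: str) -> dict[str, str]:
    """Map each C-00000291*.sys file in driver_dir to its classification."""
    results = {}
    for channel_file in sorted(Path(driver_dir).glob("C-00000291*.sys")):
        # st_mtime is seconds since the epoch; interpret it as UTC.
        modified = datetime.fromtimestamp(channel_file.stat().st_mtime, tz=timezone.utc)
        results[channel_file.name] = classify_timestamp(modified)
    return results
```

For example, `classify_channel_files(r"C:\Windows\System32\drivers\CrowdStrike")` on a running host returns a mapping from each file name to its classification.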

How could this have happened?

CrowdStrike has released a Preliminary Post Incident Review, in which they explain the technical details behind the faulty update that caused this massive outage. It can be found in their remediation and guidance hub.

PR Coverage

- Reuters

- BBC | Mass outage affects airlines, media and banks

- The Guardian | Banks, airlines and media outlets hit by global outage linked to Windows PCs

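- Die Welt (German) | Flughäfen, Dax-Unternehmen, Krankenhäuser – Das ist der Fehler, der die Welt lahm legt (Airports, DAX companies, hospitals: this is the error bringing the world to a standstill)

- Het Financieele Dagblad (Dutch) - with comments from our CEO Job Kuijpers | Kwetsbaarheid IT-beveiliging onder vergrootglas na wereldwijde storing (IT security vulnerability under scrutiny after global outage)

- NPO Radio 1 (Dutch) - with our Chief Hacker Vaisha Bernard | Welke lessen kunnen we trekken uit de computerstoring van CrowdStrike (What lessons can we learn from the CrowdStrike computer outage?)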

Latest Updates

- 2024-07-19 05:30 UTC | Tech Alert Published.

- 2024-07-19 06:30 UTC | Updated and added workaround details.

- 2024-07-19 07:08 UTC | CrowdStrike Engineering has identified a content deployment related to this issue and reverted those changes.

- 2024-07-19 07:32 UTC | Added PR coverage

- 2024-07-19 07:45 UTC | Added fix for people using Windows systems that are stuck in a reboot loop

- 2024-07-19 08:06 UTC | Added the advice not to uninstall CrowdStrike at this point as a fix is available.

- 2024-07-19 08:35 UTC | Slightly updated the fix steps

- 2024-07-19 09:07 UTC | Added answers to common questions

- 2024-07-19 09:33 UTC | Added steps to follow for public cloud virtual machines

- 2024-07-19 09:46 UTC | Added steps to follow for Azure

- 2024-07-19 10:34 UTC | Added answers to FAQ

- 2024-07-19 11:07 UTC | Added steps for Virtual Machines per cloud provider

- 2024-07-20 07:58 UTC | Added link to CrowdStrike blogpost with technical details

- 2024-07-20 09:56 UTC | Added FAQ entry about PXE options

- 2024-07-20 11:48 UTC | Added 15 reboot option to FAQ

- 2024-07-20 14:58 UTC | Added FAQ entry about recovery without BitLocker keys

- 2024-07-21 09:56 UTC | Added USB Repair tool, updated cloud repair links

- 2024-07-21 12:21 UTC | Added link to CrowdStrike's remediation and guidance hub

- 2024-07-23 08:02 UTC | Added YouTube video, linked to Remediation Hub content, clarified some topics

- 2024-07-23 19:02 UTC | Added details about automated remediation

- 2024-07-23 06:38 UTC | Added information about the Preliminary Post Incident Review

- 2024-07-30 00:00 UTC | Incident scaled down, live blog archived